ETL Pipeline Overview

A Brief Overview of My ETL Pipeline

Building an ETL Pipeline: Engineering an App for Gen Z Readers

Building a book cataloging app for Gen Z readers is quite the challenge, as existing book databases aren't exactly ideal for my use cases or a match for my audience. These databases value quantity over quality, opting to record every known work no matter how little data there is for it.

Academic texts, outdated reprints, and low-quality metadata dominated search results, burying the trendy, engaging books my users would care more for.

My solution? Build a sophisticated ETL pipeline that efficiently filters, validates, and prioritizes book metadata to curate a high-quality catalog tailored to modern readers.

The Dilemma: Quality vs. Quantity

Most book APIs and databases operate on a "more is better" principle, indexing everything with an ISBN. However, for a book cataloging and social platform app, this creates noise—and lots of it at that.

Users searching for fantasy novels wouldn't want:

- Academic study guides

- Pamphlets or workbooks

- Poorly digitized reprints from the 1800s

- Books with missing cover images or descriptions

cluttering their results.

What I Needed

I needed a system that could:

- Intelligently filter incoming book metadata based on quality and relevance

- Deduplicate the same intellectual work across multiple editions and formats

- Prioritize books that appeal to my target demographic (Gen Z readers)

- Handle errors gracefully when processing thousands of records

- Scale efficiently as the catalog grows

Architecture Overview

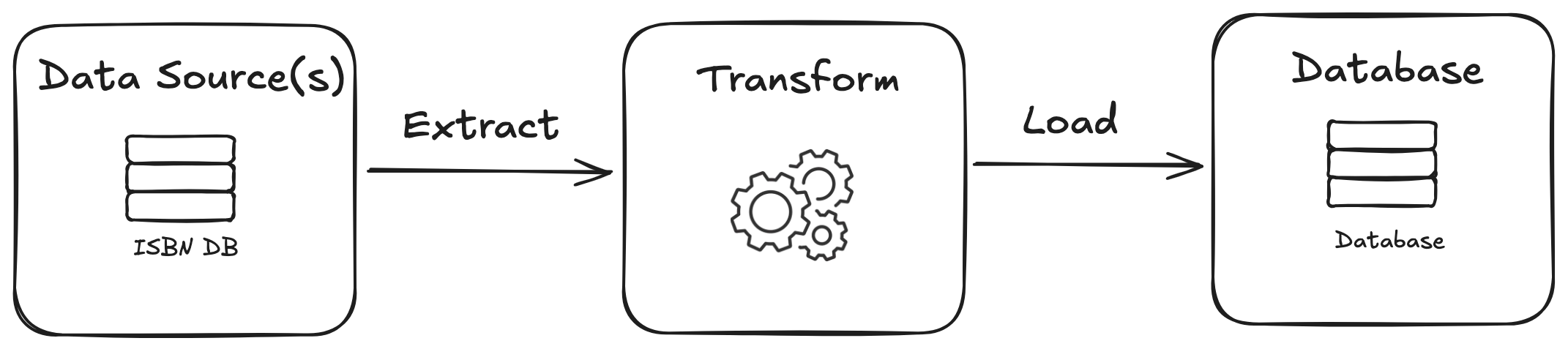

The pipeline follows a classic ETL pattern with some modern twists, built around TypeScript and PostgreSQL:

Extract → Transform & Validate → Quality Scoring → Load

Extract: On-Demand Data Sourcing

Instead of batch-importing entire catalogs, I opted to trigger the pipeline reactively.

The approach: When a user searches for a book not in our database, a worker agent fetches metadata from ISBNdb and queues it for processing. This keeps the catalog fresh and user-driven rather than bloated with irrelevant content.

I do intend to populate the database with some seed data (popular, trending books) to offload some of the initial demand for certain books. I'm sacrificing instant delivery for a much more curated, selective catalog. Over time, as more books are added to the database, that initial drawback would solve itself.

Transform: Multi-Layer Data Cleaning

The transformation layer handles the messy reality of book metadata through several specialized components:

Text Normalization — Cleans author names, handles various title formats, and processes synopsis text (including HTML entity decoding and case normalization). Dates were another headache that required proper cleaning and validation. Some books had no dates attached to them, so I had to give them a default date. Some were recorded as DD/MM/YYYY while others were YYYY/MM and so on. There were a lot of edge cases I had to account for and needed to test individual components rigorously to ensure a robust and reliable system.

Work vs Edition Modeling — A key architectural decision that separates intellectual content ("Harry Potter and the Philosopher's Stone") from specific manifestations (hardcover, paperback, ebook editions). This prevents duplicate works from cluttering search results while preserving format-specific metadata.

Publisher Canonicalization — Maps variant publisher names to canonical forms and detects imprint relationships, crucial for quality assessment and classification needs.

Language Detection — Uses pattern matching and common word analysis to filter non-English content, essential for my target audience. Why did I need this if there's a language field and I'm only searching for books with an "en" field? Simply because non-English data still manages to sneak into the API responses. I've gotten full works recorded in French that are recorded as "en" books. You'd think the database I pay for would ensure problems like this don't arise, but this is the reality I face.

One note I'm going to reiterate: This provides a very high-level overview of the entire pipeline I've built, and I won't dive into any particular individual component just yet. Each part had its share of notable challenges I had to overcome, and for that, they deserve their own space.

Load: Intelligent Quality Gate-Keeping

Before any book enters the database, it must pass through a configurable quality scoring system that acts like a rubric for the data.

Another way to think of this: Consider my database as an exclusive nightclub and my quality scorer as the bouncer. I don't just want anyone entering my club, and the bouncer will ensure that no one problematic gets inside. A biased system by design.

Quality Scoring Criteria:

- Synopsis quality and length — meaningful descriptions over any generic text

- Cover image availability — essential for visual discovery (I hate to see books without an available cover)

- Publisher reputation — major publishers vs. print-on-demand services are a priority here

- Publication recency — special consideration for classics (they are by definition timeless and a must-have)

- Metadata completeness — ISBN, page count, etc. I want rich data

Books scoring below a configurable threshold (example: 60/100) are rejected and logged for analysis. This filter dramatically reduces low-quality entries while preserving legitimate classics and quality publications.

This is an important part of my design as I'm catering to a specific audience. I don't need to account for study guides or books with very limited data. Works with no images, synopsis, or limited information in general aren't ideal.

Error Handling and Reliability

Processing book metadata at scale requires robust error handling. The system implements several reliability patterns:

Note: I'm well aware I'm not at the stage of stressing over scaling issues, but starting with a good foundation for these kinds of things will ease the migraines in the foreseeable future.

Transaction-Based Batching — Books are processed in configurable batches within database transactions. If any book in a batch fails, the entire batch rolls back, preventing partial state corruption.

Graceful Degradation — Missing optional fields don't stop processing. The system validates required fields (title, authors, ISBN) but gracefully handles missing publisher info, cover images, or publication dates.

Comprehensive Logging — Rejected books are logged with detailed reasons, creating a feedback loop for improving quality criteria and identifying data source issues. This component I appreciate the most since I often find myself coming back to rejection logs, scouring through raw data and their rejection reasoning as a means to fine-tune my quality scoring system.

Key Engineering Decisions

Several architectural choices reflect the specific challenges of building for book discovery:

Configurable Quality Thresholds — Rather than hard-coding quality rules, the scoring system accepts configuration objects. This allows A/B testing different quality bars and adapting to user feedback without code changes.

Preferred Edition Selection — When multiple editions of the same work exist, an algorithm automatically selects the "preferred" edition based on factors like cover image availability, ISBN validity, and binding quality. This reduces decision fatigue for users while maintaining data richness. Editions with the richest data get prioritized as the poster child for the app.

Modular Processing Pipeline — Each transformation step is isolated, testable, and upholds the principle of separation of concerns. Language detection, publisher mapping, and quality scoring can be modified independently, enabling rapid iteration on quality criteria.

Results and Impact

The pipeline successfully filters incoming book data, typically rejecting 40-60% of records from the source API based on quality criteria. This aggressive filtering means users see fewer, but more relevant and appealing books in their discovery experience.

The reactive sourcing approach keeps the database lean and user-focused rather than becoming a comprehensive but unwieldy catalog. Books enter the system because users actually searched for them, not because they happened to exist in a source database.

What's Next For Me?

This overview covers the high-level architecture and key decisions. In upcoming posts, I'll dive deeper into specific components such as:

- Genre Ontology and Mapping — How I built a flexible system for categorizing books beyond simple genre tags

- Publisher Canonicalization — The fuzzy matching and imprint detection algorithms that clean messy publisher data

- Quality Scoring Deep Dive — The specific criteria and weights that determine book quality for Gen Z readers

- Performance Optimization — How batch processing and database design choices affect pipeline throughput

This ETL pipeline demonstrates how thoughtful engineering can solve domain-specific problems. By understanding my users' needs and building quality gates accordingly, I created a system that prioritizes discovery over completeness—exactly what a modern book app needs.